HOW WILL LOD EXPRESSIONS AFFECT THE PERFORMANCE IN TABLEAU?

With Tableau 9.0, a new type of expression called Level of Detail (LOD) was introduced. LOD expressions provide a way to easily compute aggregations that are not at the level of detail of the visualization. LOD is a breakthrough in the area of calculations. The LODs have introduced a new thought process which provides a convenient and easy technique to solve many complex requirements, which were previously a pain.

There are many articles explaining what LOD expressions are and how one can use them in calculations. For more details about LOD read here.

Since LOD expressions provide a way to easily compute aggregations that are not at the level of detail of the visualization, I was curious to know how Tableau was handling such calculations in the background. It was not at all surprising when I found that Tableau was doing it using the “sub queries” which were actually executed by the underlying database. To know more about how Tableau is constructing these sub queries, you can use the Tableau Performance Recorder.

Because sub queries can be very tricky at times, the first question that comes to my mind is, “What will happen to the performance?” The general perception is that executing sub queries along with regular queries might degrade the performance to a level which will not be acceptable.

So now one might be wondering if one should really use the LOD expressions or not. I conducted a very simple test to better understand this.

Putting LOD Expressions to the Test

For the test I considered a very basic use case using the Superstore Sales database. I created a big text table with multiple dimensions and displayed the sales values. I also wanted to calculate the “% of Sales” along the entire the table.

Prior to LOD expressions this use case would have been solved using the Table Calculations. Hence my test included both scenarios, i.e., that with the LOD expressions and that with the Table Calculations. I also made sure that in both scenarios the visualisation (# of fields and positions) remained the same. The test was conducted on a live database connection as well as on the Tableau Data extract.

The following are the details of the test:

- The test used a table in SQL Server 2014 database of approximately 5 million records.

- While Tableau connected to this table, a data extract was also generated.

- A Tableau worksheet, which was a text report, was created with multiple fields along with a Table Calculation to determine the “% of Total Sales” for each of the values displayed in the text table. The following function was written:

- The above worksheet was duplicated and instead of using a Table Calculation, an LOD expression was used to calculate the “% of Sales” for the entire table. The following functions were used:

- The difference between these two Tableau worksheets: To calculate the “Total sales,” one sheet used a Table Calculation and the other sheet used an LOD expression.

- Both of the Tableau worksheets were text reports with approximately 160 K marks.

- Both of the Tableau worksheets were executed against the live connection to SQL Server database as well as the Tableau data extract.

The observations captured using the Performance Recorder tool are outlined below:

Test 1: Live Connection + Table Calculation

Timeline (Approximate Total Elapsed Time: 60 Seconds) |

- The SQL Server was slow in “Executing the query,” which consumed most of the time.

- Being a big text table (160K marks), “Computing layout” also took significant amount of time.

- “Computing table calculations” and “Computing totals” (Total Sales and % of total) also took a significant amount of time on the big text table.

Test 2: Live Connection + LOD Expression

Timeline (Approximate Total Elapsed Time: 48 Seconds) |

- The SQL Server was slow in “Executing the query”, which consumed most of the time. There are multiple queries due to the LOD expressions, however the queries ran in parallel.

- A sepearte Query was generated for LOD which also took a significant amount of time.

- Being a big text table (160K marks), “Computing layout” also took significant amount of time.

- No “Table calculations” were performed – the requirement was achieved through the LOD.

Test 3: Data Extract + Table Calculation

| Timeline (Approximate Total Elapsed Time: 44 Seconds) |

|

- The extract is designed for faster query response, thus far less time was consumed for the execution of the query.

- Being a big text table (160K marks), “Computing layout” also took significant amount of time.

- “Table calculations” and “Computing totals” (Total Sales and % of total) also took significant amount of time on the big text table.

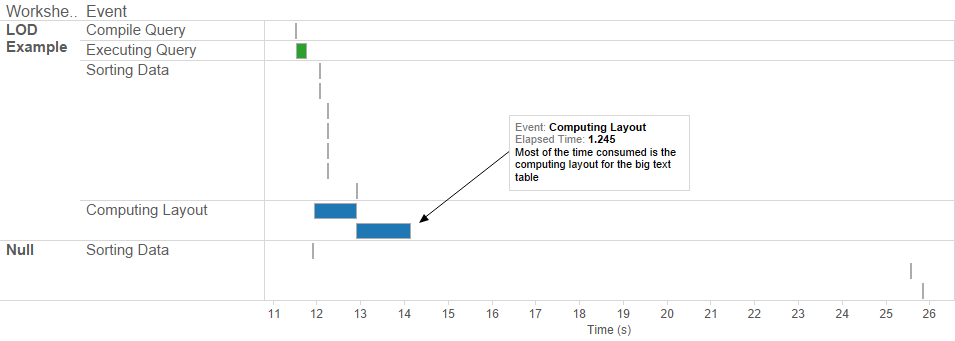

Test 4: Data Extract + LOD Expression

Timeline (Approximate Total Elapsed Time: 14 Seconds) |

- The extract is desgned for faster query response, thus far less time was consumed for the execution of query.

- Being a big text table (160K marks), “Computing layout” also took significant amount of time.

- No “Table calcuations” were performed – the requirement was achieved through the LOD.

Summary

There are three categories to be considered:

- Executing Queries – With live connection, the SQL Server would execute the queries and with extract mode, the Data Extract (TDE) would execute the queries.

- Calculations – With LOD, the database (Live or TDE) would perform the calculations and with Table Calculations, Tableau (VizQL Component) would perform the calculations.

- Computing Layouts – Irrespective of the database mode (Live or TDE) or calculation mode (LOD or Table Calculations) this process is responsible for preparing the image tiles to build the final presentation (output text table). This is executed by the VizQL component after the queries are executed and calculations are performed.

Conclusion

To conclude further let us ignore the third component which is “Computing Layouts” and focus on “Executing Queries” and “Calculations”.

Database

It is clearly evident that LOD expressions do not directly affect the performance, and instead there are other factors involved. If the database in consideration is a super-fast database (Live or Tableau data extract) and intelligent enough to perform parallel queries, then introduction to LOD would be absolutely fine. If the database (Live or Tableau data extract) is not optimized for performance then you would have an issue with the regular queries and, in this case, it would be unfair to say that the slow performance is due to LOD.

Table Calculation

The VizQL component of Tableau performs these calculations, and it is not fair to compare this to a database. VizQL is simply helping us with some calculations at the client level rather than sending it back to the database, and the performance will depend on the number of “marks” being displayed in the chart or table. If the number of marks is too great then VizQL will be under tremendous load.

Hence the LOD must be used if we are sure that the database is capable of handling sub queries intelligently. If the database is not optimized for the same, then we can consider alternatives like Table Calculations, however these will work efficiently with fewer marks. There may be limitations with Table Calculations as well since, unlike LOD, they cannot compute aggregations that are not at the level of detail of the visualization.

This Tableau Tip was written by:

Sourabh Dasgupta

Sourabh Dasgupta is the Director of Technology & BI at Corporate Renaissance Group India and is also the Product Manager of CRG’s in-house software products such as FlexABM and Cost Allocator. Over the past 14 years he has been involved as a technical expert in various projects and assignments related to implementation of Activity Based Costing and Business Intelligence and reporting systems in India as well as South Africa.

He is a one of the professional trainers for Tableau software and has conducted many trainings for the clients. He has been involved in designing and development of Tableau reports and dashboards, and was awarded with a Tableau Desktop 8 Qualified Associate certificate as well as a Tableau Server 8 Qualified Associate certificate. He has successfully conducted trainings on the Tableau Software (Desktop and Server versions) for many clients, which included basic and advanced features of the Tableau Desktop and Tableau Server.

Sourabh has a Bachelor of Science degree (Computer Science, Physics, and Mathematics) from Nagpur University, India and a Diploma from the National Institute of Information Technology (NIIT), Pune, India. He also holds a Microsoft Certified Professional certificate for Designing and Implementing Desktop Applications with Microsoft Visual basic.

If you wish to accelerate your skills and empower your learning with hands-on education, or are seeking an Expert-on-Demand, reach out to Sourabh at sdasgupta@crgroup.com

Leave a Comments

You must be logged in to post a comment.